Troubleshoot Write Conflicts in Optimistic Transactions

This document introduces the reason of and solutions to write conflicts in optimistic transactions.

Before TiDB v3.0.8, TiDB uses the optimistic transaction model by default. In this model, TiDB does not check conflicts during transaction execution. Instead, while the transaction is finally committed, the two-phase commit (2PC) is triggered and TiDB checks write conflicts. If a write conflict exists and the auto-retry mechanism is enabled, then TiDB retries the transaction within limited times. If the retry succeeds or has reached the upper limit on retry times, TiDB returns the result of transaction execution to the client. Therefore, if a lot of write conflicts exist in the TiDB cluster, the duration can be longer.

The reason of write conflicts

TiDB implements its transactions by using the Percolator transaction model. percolator is generally an implementation of 2PC. For the detailed 2PC process, see TiDB Optimistic Transaction Model.

After the client sends a COMMIT request to TiDB, TiDB starts the 2PC process:

- TiDB chooses one key from all keys in the transaction as the primary key of the transaction.

- TiDB sends the

prewriterequest to all the TiKV Regions involved in this commit. TiKV judges whether all keys can preview successfully. - TiDB receives the result that all

prewriterequests are successful. - TiDB gets the

commit_tsfrom PD. - TiDB sends the

commitrequest to the TiKV Region that contains the primary key of the transaction. After TiKV receives thecommitrequest, it checks the validity of the data and clears the locks left in theprewritestage. - After the

commitrequest returns successfully, TiDB returns success to the client.

The write conflict occurs in the prewrite stage. When the transaction finds that another transaction is writing the current key (data.commit_ts > txn.start_ts), a write conflict occurs.

Detect write conflicts

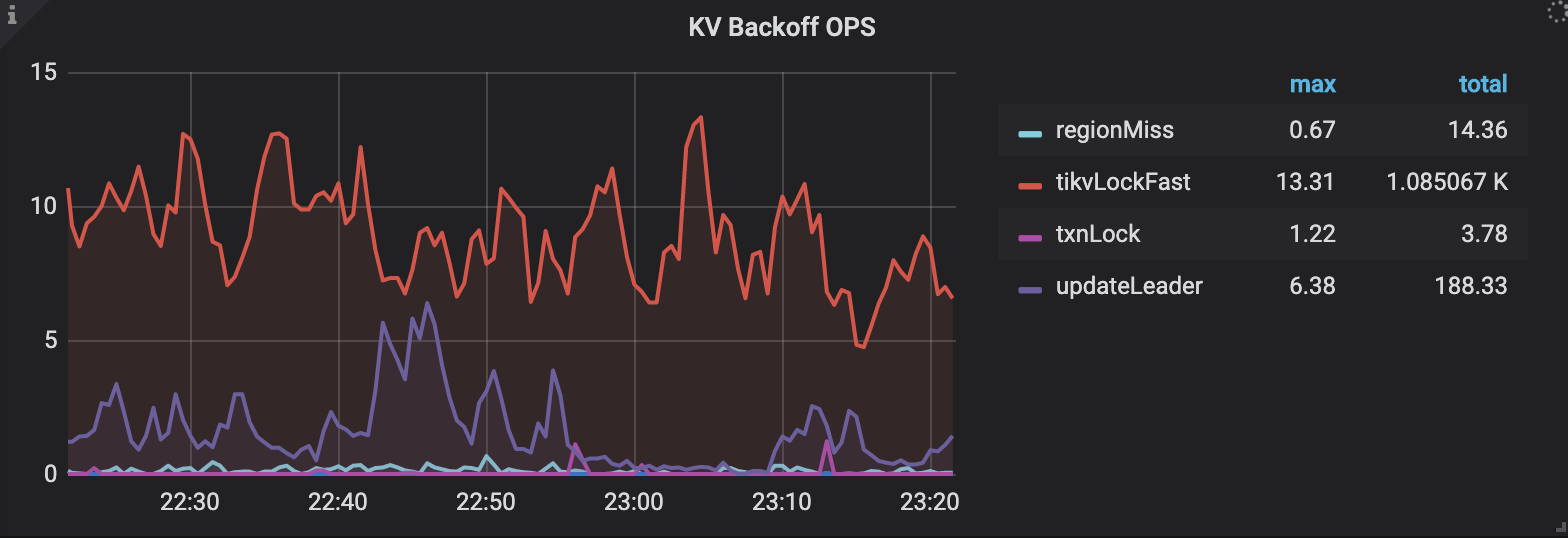

In the TiDB Grafana panel, check the following monitoring metrics under KV Errors:

KV Backoff OPS indicates the count of error messages per second returned by TiKV.

The

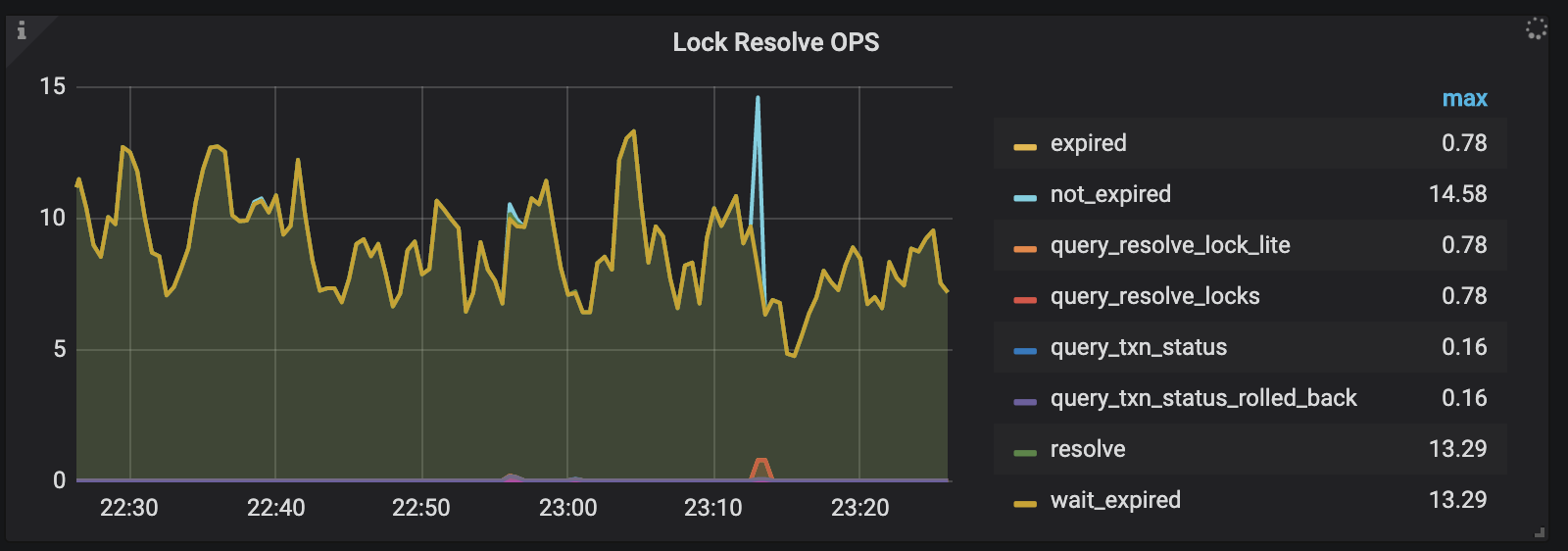

txnlockmetric indicates the write-write conflict. ThetxnLockFastmetric indicates the read-write conflict.Lock Resolve OPS indicates the count of items related to transaction conflicts per second:

not_expiredindicates the TTL of the lock was not expired. The conflict transaction cannot resolve locks until the TTL is expired.wait_expiredindicates that the transaction needs to wait the lock to expire.expiredindicates the TTL of the lock was expired. Then the conflict transaction can resolve this lock.

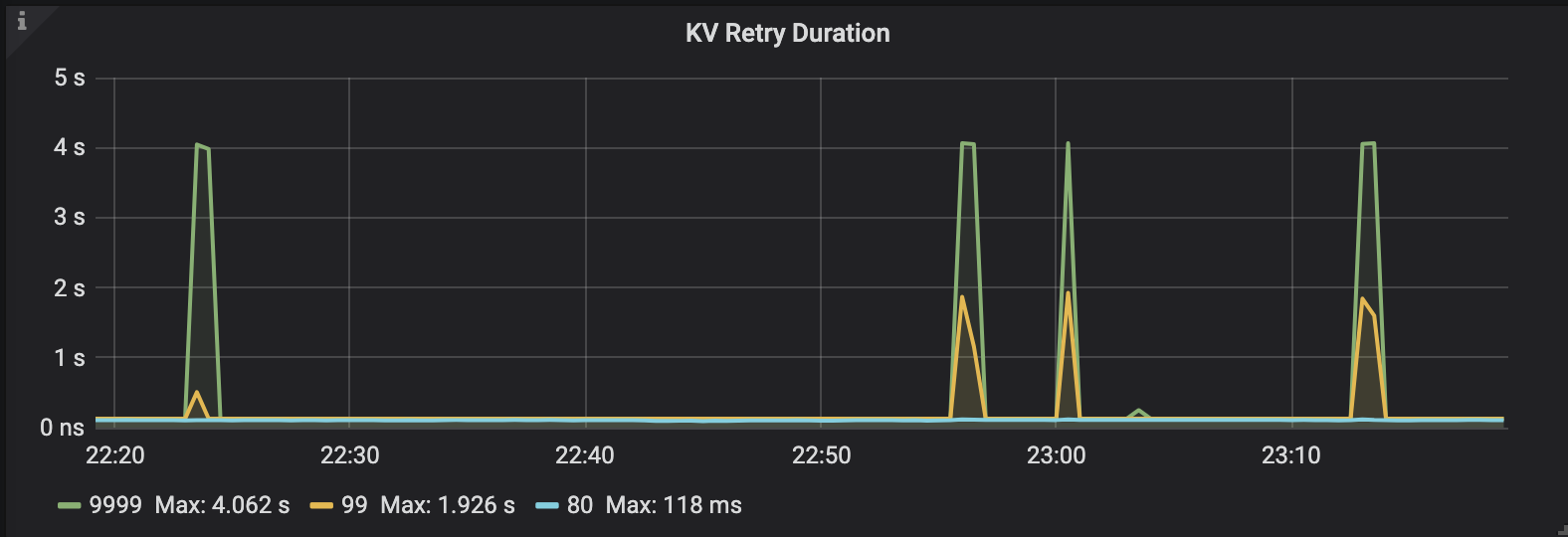

KV Retry Duration indicates the duration of re-sends the KV request:

You can also use [kv:9007]Write conflict as the keywords to search in the TiDB log. The keywords also indicate the write conflict exists in the cluster.

Resolve write conflicts

If many write conflicts exist in the cluster, it is recommended to find out the write conflict key and the reason, and then try to change the application logic to avoid write conflicts. When the write conflict exists in the cluster, you can see the log similar to the following one in the TiDB log file:

[2020/05/12 15:17:01.568 +08:00] [WARN] [session.go:446] ["commit failed"] [conn=3] ["finished txn"="Txn{state=invalid}"] [error="[kv:9007]Write conflict, txnStartTS=416617006551793665, conflictStartTS=416617018650001409, conflictCommitTS=416617023093080065, key={tableID=47, indexID=1, indexValues={string, }} primary={tableID=47, indexID=1, indexValues={string, }} [try again later]"]

The explanation of the log above is as follows:

[kv:9007]Write conflict: indicates the write-write conflict.txnStartTS=416617006551793665: indicates thestart_tsof the current transaction. You can use thepd-ctltool to convertstart_tsto physical time.conflictStartTS=416617018650001409: indicates thestart_tsof the write conflict transaction.conflictCommitTS=416617023093080065: indicates thecommit_tsof the write conflict transaction.key={tableID=47, indexID=1, indexValues={string, }}: indicates the write conflict key.tableIDindicates the ID of the write conflict table.indexIDindicates the ID of write conflict index. If the write conflict key is a record key, the log printshandle=x, indicating which record(row) has a conflict.indexValuesindicates the value of the index that has a conflict.primary={tableID=47, indexID=1, indexValues={string, }}: indicates the primary key information of the current transaction.

You can use the pd-ctl tool to convert the timestamp to readable time:

tiup ctl:<cluster-version> pd -u https://127.0.0.1:2379 tso {TIMESTAMP}

You can use tableID to find the name of the related table:

curl http://{TiDBIP}:10080/db-table/{tableID}

You can use indexID and the table name to find the name of the related index:

SELECT * FROM INFORMATION_SCHEMA.TIDB_INDEXES WHERE TABLE_SCHEMA='{db_name}' AND TABLE_NAME='{table_name}' AND INDEX_ID={indexID};

In addition, in TiDB v3.0.8 and later versions, the pessimistic transaction becomes the default mode. The pessimistic transaction mode can avoid write conflicts during the transaction prewrite stage, so you do not need to modify the application any more. In the pessimistic transaction mode, each DML statement writes a pessimistic lock to the related keys during execution. This pessimistic lock can prevent other transactions from modifying the same keys, thus ensuring no write conflicts exist in the prewrite stage of the transaction 2PC.